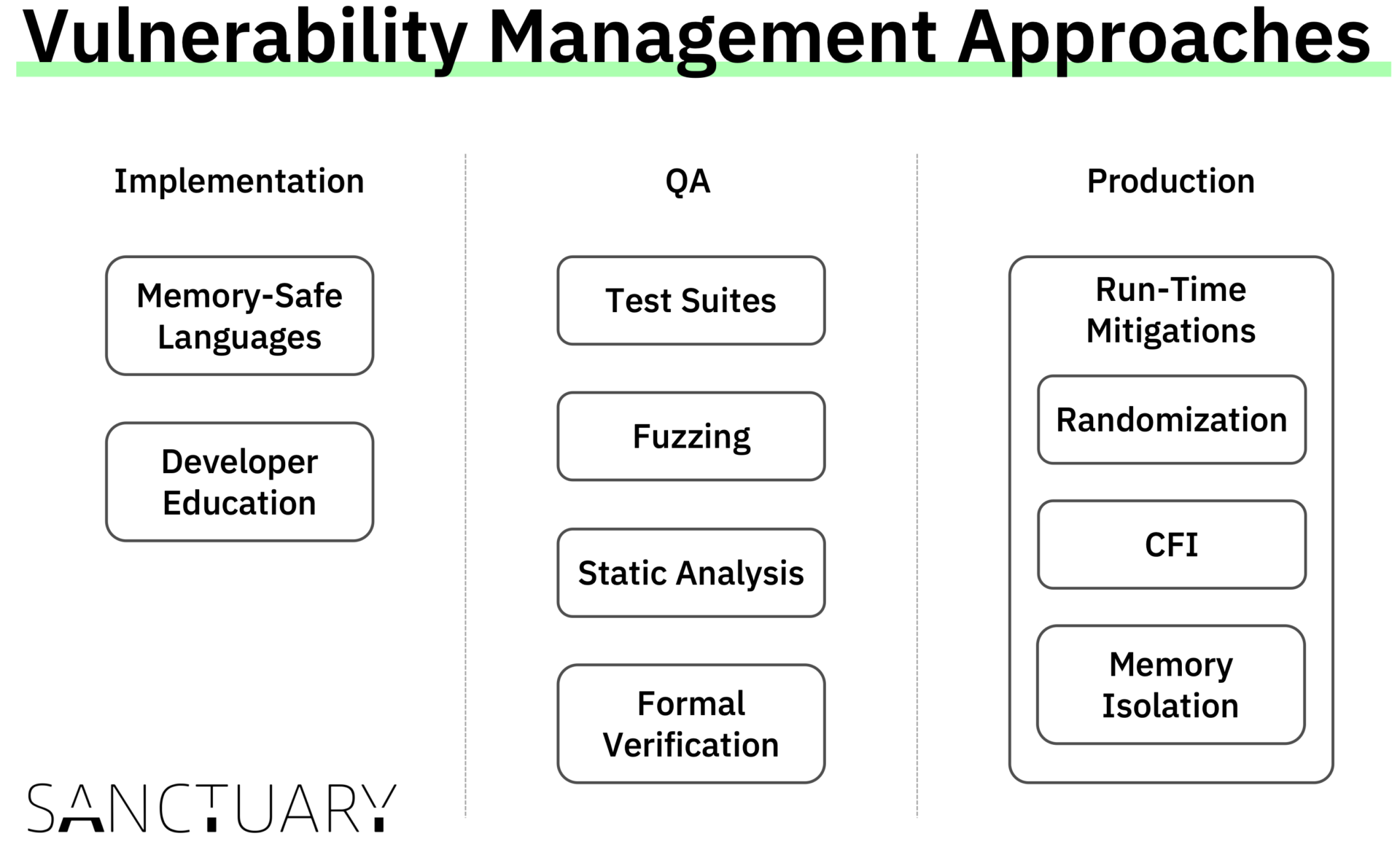

Managing run-time vulnerabilities is a rather nasty problem in modern software engineering. In this post, we examine a number of approaches that have been proposed and implemented by the security community. Some approaches aim at preventing bugs from being introduced in the first place, others aim at finding bugs before the code is deployed, and yet others aim at making existing bugs harder to exploit in the field.

Preventing Memory Corruption

The most radical approach is to try and prevent run-time vulnerabilities from ever being introduced in the application. As an example, switching from a memory-unsafe language like C (which places the burden of memory management on the programmer) to a memory-safe language like Rust or Python inherently prevents a number of classes of memory-corruption vulnerabilities. Similar effects, although harder to prove, can be achieved with developer education and strict coding conventions. While valuable, these approaches do not help projects with existing codebases written in memory-unsafe languages.

Finding Bugs Before Deployment

A second approach for managing run-time vulnerabilities is to acknowledge the likely presence of memory-corruption vulnerabilities in a codebase and to try to find them before deployment to a production environment. This can be done using a number of techniques:

- A comprehensive test suite can be useful to ensure that the code performs in accordance with the specification. However, human-written test suites tend to focus on intended functionality, while vulnerabilities are by definition unintended.

- Fuzzing, a form of automated software testing, automatically generates test inputs with the aim of exercising as many reachable branches of the program as possible. It is a very effective technique for finding bugs, and we use it extensively at SANCTUARY to uncover complex bugs in our software during development. However, it gives no guarantee that the program is free of further bugs.

- Static analysis tools can detect and report a number of coding patterns that go against best practices and often lead to run-time vulnerabilities. One popular tool is the Clang Static Analyzer, which can easily be added to an LLVM-based compilation flow, and is also one approach that we use at SANCTUARY to ensure high code quality. While static analysis tools help to maintain code quality, they also frequently report false positives, that is, warnings about code that is actually correct.

- Formal methods can be used to prove that the program behaves according to a specification. This is a very powerful guarantee; however, formally verifying the correctness of a program can require a significant amount of human effort. As a result, it scales poorly to complex software. Applying formal methods to legacy code is even harder, and often a compromise has to be made on which parts should get verified.
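
To make the contrast between a hand-written test and fuzzing concrete, here is a minimal sketch in Python. The parser `parse_record`, its input format, and the `fuzz` helper are hypothetical and written only for illustration; real fuzzers are coverage-guided and far more sophisticated than this purely random loop.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Hypothetical toy parser: the first byte is a length, the rest is the payload."""
    if not data:
        raise ValueError("empty input")
    length = data[0]
    payload = data[1:]
    # Bug: the declared length is trusted without comparing it to the payload size.
    # In C this would be an out-of-bounds read; in Python it surfaces as IndexError.
    return bytes(payload[i] for i in range(length))


def fuzz(target, iterations=1000, max_len=8, seed=0):
    """Feed random byte strings to `target` and collect inputs that crash it."""
    rng = random.Random(seed)
    crashes = []
    for _ in range(iterations):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            pass  # documented rejection of malformed input, not a bug
        except Exception as exc:
            crashes.append((data, exc))
    return crashes


# A typical hand-written test only exercises the intended behavior and passes.
assert parse_record(b"\x02hi") == b"hi"

# Random inputs quickly reach the case the test author did not think of.
crashes = fuzz(parse_record)
print(f"{len(crashes)} crashing inputs found")
```

The functional test passes, yet the random inputs still trigger the unchecked length. An empty `crashes` list would not prove the parser correct, which mirrors the limitation noted for fuzzing above.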

Mitigating the Ones That Slipped Through

The last approach for managing run-time vulnerabilities acknowledges that, even after thorough testing, there are likely still memory-corruption vulnerabilities that have not been discovered. Hence, the idea is to put in place mitigations that aim at making vulnerabilities harder to exploit. Run-time mitigations can be categorized according to their operating principle:

- Defenses based on software diversity (or randomization) automatically change some detail of the application, which the attacker needs to know in order to mount the attack. The application’s functionality is unchanged, but the attacker now lacks some information which is required for the attack. As an example, a common mitigation is to randomize the location of the application’s code in memory. Randomization defenses are conceptually simple and generally enjoy good compatibility and low performance overhead. Still, if the adversary is able to guess or somehow disclose the randomization secret, the mitigation stops being effective. Hence, at SANCTUARY, we combine randomization with the two other mitigations mentioned below.

- Defenses based on integrity checks instrument the application in order to enforce some security property. A prominent example is Control-Flow Integrity (CFI), which ensures that the actual control flow of a program complies with a control-flow graph (CFG) which can be generated from the program’s source. While CFI gives deterministic security guarantees, generating accurate CFGs is very challenging. In practice, approximate CFGs have to be used, which permit more control-flow transfers than the program actually needs and therefore weaken the protection. Moreover, CFI does not protect against attacks that do not deviate from the theoretical CFG; these include simple attacks that just read some data from memory and more complex data-only attacks.

- Defenses based on memory isolation aim at partitioning the application into smaller components and enforcing barriers between them, so that a vulnerability in a component cannot be used to attack a different one. As an example, Trusted Execution Environments (TEEs) use hardware-enforced memory isolation to ensure their integrity and confidentiality. This is also the core concept of the Sanctuary Embedded Consolidation. Yet, there can still be a vulnerability inside the same component, for which we at SANCTUARY leverage the former two mitigations in combination with extensive testing.
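
The integrity-check principle described for CFI above can be illustrated with a toy model in Python. This is only a sketch of the idea: the handler functions, `ALLOWED_TARGETS`, `CFIViolation`, and `checked_call` are hypothetical names, and real CFI schemes instrument machine code at compile time rather than wrapping calls in a high-level language.

```python
def handle_read(arg):
    return f"read {arg}"


def handle_write(arg):
    return f"write {arg}"


def spawn_shell(arg):
    # Stands in for code the attacker would like to reach.
    return "shell"


# Approximate CFG for a single indirect call site: the set of legitimate targets.
ALLOWED_TARGETS = {handle_read, handle_write}


class CFIViolation(Exception):
    """Raised when an indirect call targets a function outside the CFG."""


def checked_call(target, arg):
    """Instrumented indirect call: verify the target before transferring control."""
    if target not in ALLOWED_TARGETS:
        raise CFIViolation(f"illegal call target: {target.__name__}")
    return target(arg)


# A legitimate call passes the check.
print(checked_call(handle_read, "config"))

# A corrupted function pointer that leaves the CFG is stopped.
try:
    checked_call(spawn_shell, "config")
except CFIViolation as exc:
    print("blocked:", exc)

# Limitation: redirecting the call to another allowed target goes unnoticed.
print(checked_call(handle_write, "config"))
```

The last call shows the weakness mentioned above: an attacker who swaps one permitted target for another stays within the CFG, so the check cannot tell the difference.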